The enthusiasm for GPT-3 is exciting, worrying and a bit sad.

It's exciting because technology is advancing, and people are finding new things to do with it.

It's worrying because very smart interpreters of technology are seeing GPT-3 as a sign of imminent artificial general intelligence, AGI - which reveals too little attention to what intelligence is, and how or if computers might get it.

GPT-3 is as much evidence of machine intelligence as a mirror, or a radio, is evidence of machine intelligence. What GPT-3 is revealing, or rather reflecting, is the vastness, depth, and diversity of human intelligence.

When you look in a polished mirror, you see and understand the world reflected back to you, in as much detail and meaning as your eyes and mind can resolve.

But is a mirror a machine? Is it smart? That would be a very strange conclusion: not only is the mirror not 'doing' very much at all, the real work, the work of seeing and understanding, is being done by the ... the human looking in the mirror.

What is very worrying about the GPT-3 enthusiasm is the misunderstanding of how it generates valid output. It doesn't do it through any a priori knowledge of the world, or any synthesis of core principles, or any evolutionary reflection that might analogize to human thought. It just reflects back what it has been successively told are the best bits of human intelligence.

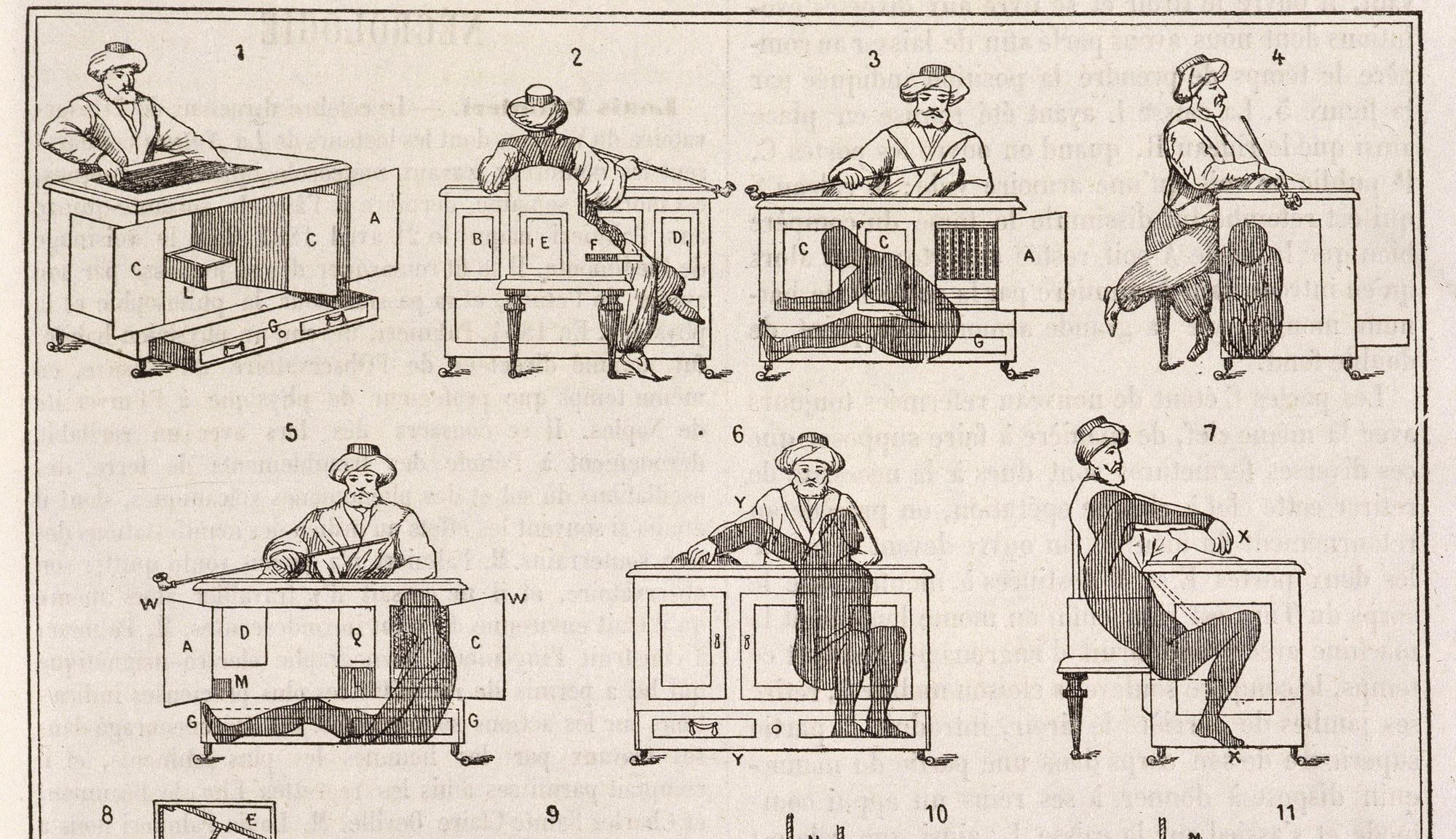

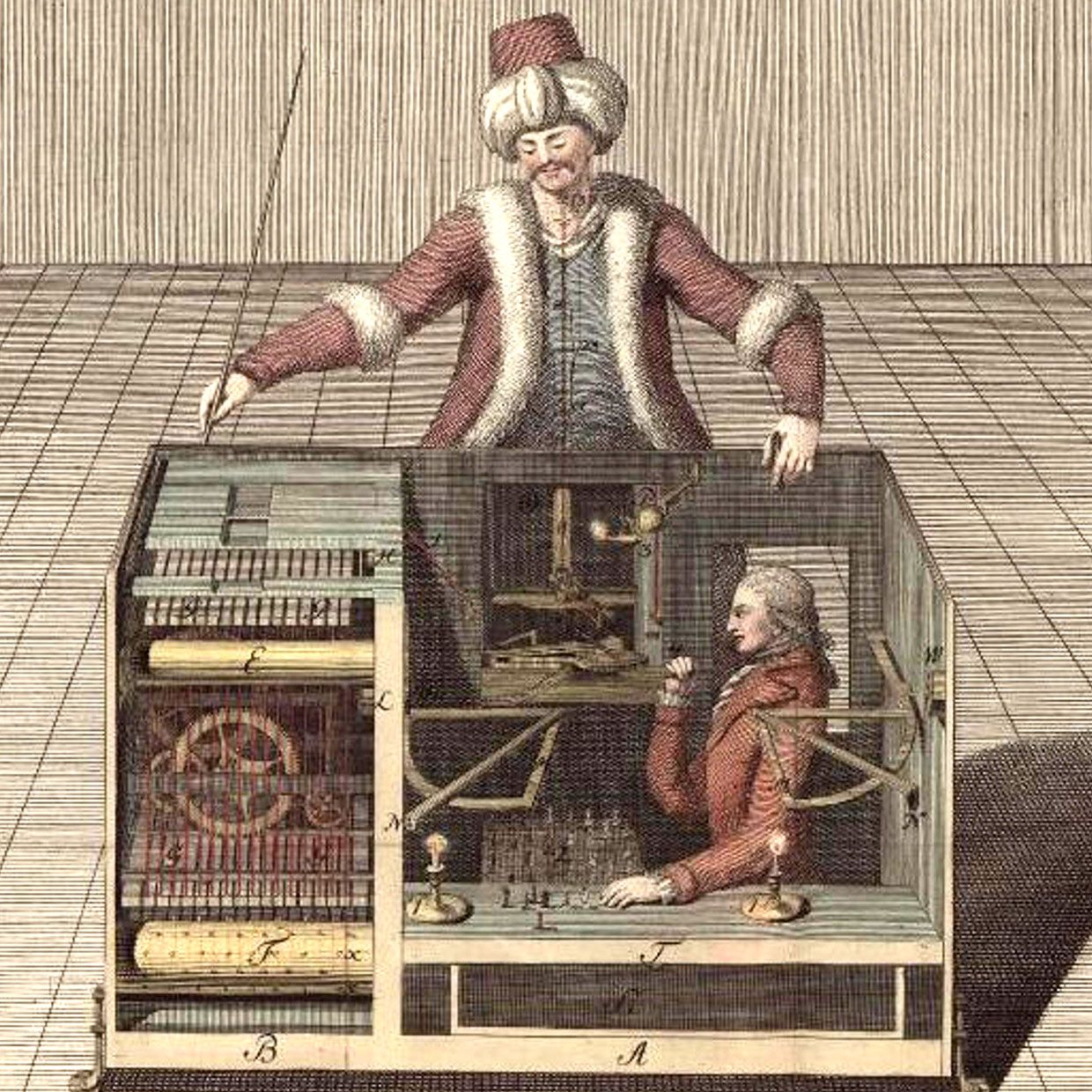

What people are seeing, when they watch GPT-3 'code' or 'draw' from natural language inputs, is the miracle of ... watching human capacity being synthesised. The output is a direct transfer of pre-existing human intelligence. This is the Digital Turk.

Would we say that a robot had learned to cook, or developed a taste for quality food, if it could generate perfect new recipes by accurately synthesising the weights, measures, ingredients and cooking times of all previous cookbooks - but had never baked or eaten a cake? No. We would be - we are - watching a cataloguing exercise, not a Michelin star in the making.

Mirrors, at first sight, are fascinating and profound for human culture, and interesting and useful under continuous use. Einstein included a mirror in many of his thought experiments, as a technical and conceptual aid to scientific progress. But he didn't claim it contained any special essence, or insight, or cognitive capacity.

When GPT-3 reflects back a synthesis of human intelligence, it's like the beginnings of mirrors as a social phenomenon and as technology. We learn things about ourselves, we bring features in and out of focus. Progress is truly happening. But in doing so, mirrors aren't becoming smart: humans are. We have found another level of radiant human cognition, not a machine with a mind.

Why people want to see this as the beginning of non-human intelligence, rather than a deepening of human intelligence, is incomprehensible to me. The only explanation I can offer is that maybe all technology and science is really, subliminally, evidence of the human race's urge to understand itself as much as, or more than, its environment.

Machine intelligence would begin to be in evidence in the iterative refinement of knowledge by computers without human involvement. GPT-3 is not this. And the inverse problem applies too: if a computer tried to show us a non-human intelligence, how would we know what we were seeing?

This is a reason why GPT-3 hype is sad: not only is it mistaking the synthesis and reflection of human intelligence for machine intelligence, it is missing the opportunity to discover what a real machine intelligence might be like.

The collapse of credibility in this enthusiasm is in part based on the lack of an explicit meta-model that can be interrogated. GPT-3 is not even at Turing Test levels of coherence if you try to 'ask it' why it made the choices it made.

But the failure to understand GPT-3's relevance lies deeper, at the very foundations of intelligence, which enthusiasts of AI and AGI absolutely refuse to engage with: the nature of agency, identity, will, autonomy. These concepts are distinct from information processing. They are the realm of the subjective, and as such are hard to convert to objective paradigms.

But without them, it's impossible to see how computational cataloguing and synthesis tools that cannot be interrogated at one level of remove - why did you make this choice, what does it mean, what do you think without reference to a prior catalogue - are close to, or even have anything to do with, human intelligence.

Computational linguistics, of which GPT-3 is a part, has not only failed to destroy the value of Chomsky's initial questions - how do we know so much from so little, and how can we communicate it so well? - it has made them a shining mountainside of enquiry that we aren't even bothering to climb any more.

When Marconi made the first radio transmissions, the sense of magical breakthrough was not that he had contacted another, new technical species, but rather that he had contacted another human. The ghost in the machine is us.